Inside Guardrails AI: A New Framework for Safety, Control and Validation of LLM Applications

The framework is getting a lot of traction within the LLM community.

I recently started an AI-focused educational newsletter, that already has over 160,000 subscribers. TheSequence is a no-BS (meaning no hype, no news, etc) ML-oriented newsletter that takes 5 minutes to read. The goal is to keep you up to date with machine learning projects, research papers, and concepts. Please give it a try by subscribing below:

Safety and control logic are essential constructs to streamline the adoption of LLMs. From compliance, security to basic validators, having a consistent framework to express validation logic is super important in LLM applications. Not surprisingly, there has been a new generation of frameworks emergring in this category. From that group, Guardrails AI seems to be getting quite a bit of attention as a framework to ensure safety and control in LLM apps.

Guardrails AI empowers you to establish and uphold assurance standards for AI applications, encompassing output structuring and quality control. This cutting-edge solution accomplishes this by erecting a firewall-like enclosure, referred to as a “Guard,” around the Large Language Model (LLM) application. Within this Guard, a suite of validators is housed, which can be drawn from our pre-built library or customized to align precisely with your application’s intended function.

Inside Guardrails AI

Guardrails AI stands as a fully open-source library designed to safeguard interactions with Large Language Models (LLMs). Its features include:

— A comprehensive framework for crafting bespoke validators.

— Streamlined orchestration of the prompt → verification → re-prompt process.

— A repository of frequently employed validators for diverse use cases.

— A specification language for communicating precise requirements to the LLM.

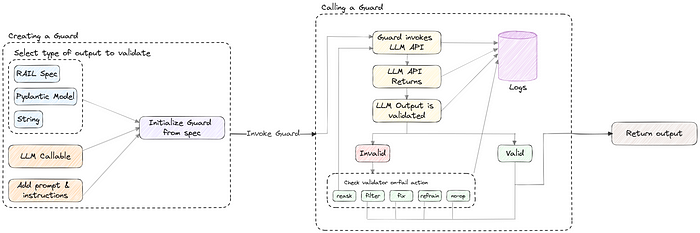

Under the hood, Guardrails introduces two pivotal components:

- Rail: This represents an object definition that enforces specifications on LLM outputs. It utilizes the Reliable AI Markup Language (RAIL) to define structure and type information, validators, and corrective measures for LLM outputs. Rails can be defined in either Pydantic for structured outputs or directly in Python for string outputs.

- Guard: Serving as a lightweight wrapper, the Guard encapsulates LLM API calls, enabling the structuring, validation, and correction of outputs. The Guard object is the central interface for Guardrails AI, fueled by a RailSpec, and entrusted with running the Guardrails AI engine. It handles dynamic prompts, wraps LLM prompts, and meticulously maintains call history.

The following architecture diagram illustrates those components:

Validators play a pivotal role within Guardrails AI. They are instrumental in applying quality controls to schemas articulated in RAIL specs. These validators specify the criteria for evaluating the validity of an output and prescribe actions to rectify deviations from these criteria.

When a validator is applied to a property within a schema, and an output is presented for that schema, whether by encapsulating the LLM call or supplying the LLM output directly, the validators come to life. They scrutinize the property values they are applied to, ensuring adherence to the stipulated standards.

Furthermore, Guardrails AI introduces key elements within RAIL specs:

- Output Element: Enclosed within <output>…</output> tags, this element precisely defines the expected output of the LLM.

- Prompt Element: Nestled within <prompt></prompt> tags, this element encapsulates high-level instructions dispatched to the LLM, delineating the overarching task.

- Instruction Element: Enclosed by <instructions></instructions> tags, this element houses high-level instructions conveyed to the LLM, such as system messages for chat models. Guardrails AI, with its versatile framework and robust architecture, empowers you to harness the potential of AI with enhanced assurance and precision.

Using Guardrails.ai

The process of using Guardrails.ai has two fundamental steps:

- Creating the RAIL spec.

- Incorporating the spec into the code

The development of a RAIL specification is a crucial step in defining the expected structure and types of the LLM output, establishing quality criteria for validating the output, and outlining the necessary corrective measures for handling invalid outputs.

Within the realm of RAIL, we embark on the following actions:

- Request the LLM to generate an object consisting of two key fields: “explanation” and “follow_up_url.”

- For the “explanation” field, we enforce a stringent condition. The generated string must fall within a length range of 200 to 280 characters. Should the explanation fail to meet this valid length criterion, we prompt the LLM to reevaluate.

- The “follow_up_url” field demands that the URL provided is reachable. If the URL fails this accessibility test, it will be promptly filtered out of the response.

Beneath the surface of RAIL version 0.1, our quality criteria and validation rules take shape:

<output>

<object name="bank_run" format="length: 2">

<string

name="explanation"

description="A paragraph about what a bank run is."

format="length: 200 280"

on-fail-length="reask"

/>

<url

name="follow_up_url"

description="A web URL where I can read more about bank runs."

format="valid-url"

on-fail-valid-url="filter"

/>

</object>

</output>Amidst this schema, the framework is established for the expected output, including its structure, constraints, and reactions to deviations from the established norms.

With the RAIL specification in hand, our next stride entails leveraging this blueprint to create a Guard object. This Guard object, acting as a sentinel, envelops the LLM API call and ensures strict adherence to the RAIL specification in its output.

To initiate this process, we call upon the capabilities of Guardrails:

import guardrails as gd

import openai

guard = gd.Guard.from_rail(f.name)

# Wrap the OpenAI API call with the `guard` object

raw_llm_output, validated_output = guard(

openai.Completion.create,

engine="text-davinci-003",

max_tokens=1024,

temperature=0.3

)

print(validated_output)In this way, Guardrails steps into the role of a vigilant protector, safeguarding the integrity and conformity of LLM outputs to the defined RAIL specification.

![MCP Servers [Explained] Python and Agentic AI Tool Integration](https://miro.medium.com/v2/resize:fit:679/1*QXaHCUMHMiHQNH_QG6y5ag.png)